A Simple Way GitHub Copilot @vision Helped Me Be More Productive

I am always on the lookout for ways to make my life easier with the help of computers, and one of the more recent things that I started using is really helping me in this domain - the @vision extension for GitHub Copilot. You can get it in the Visual Studio Marketplace.

In their own words, the developers of this extension say this:

Vision for Copilot Preview is an extension that enhances chat interactions by enabling users to leverage advanced vision capabilities. This preview feature allows users to attach images directly as contextual input, enriching conversations and enabling more dynamic, visually-supported responses. This extension will be eventually deprecated in favor of built-in image flow in Github Copilot Chat.

Exactly what I needed. The idea is fairly straightforward - within GitHub Copilot, you can use the @vision command alongside some kind of visual (say, an architecture diagram, or a Seaborn plot someone generated) and GitHub Copilot can perform work on top of that image. I find it particularly useful for explaining data that I might not have the source material for and then replicating the visualization in Python.

Let’s see how this works in action.

Setting up Visual Studio Code

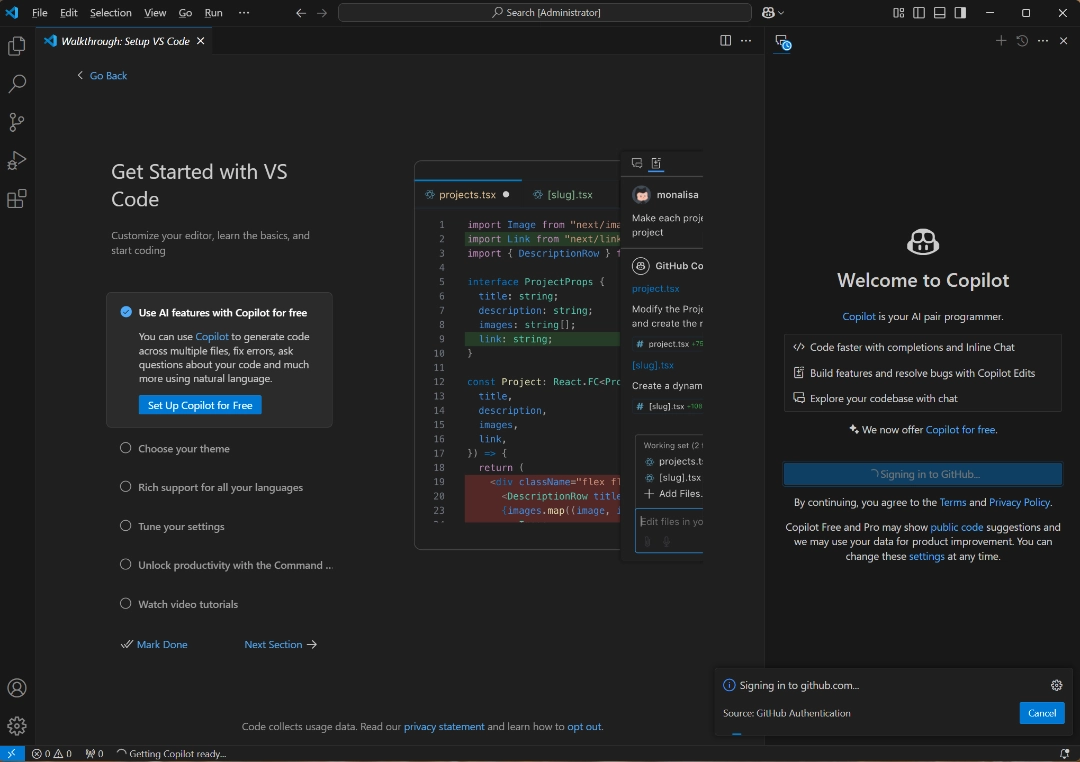

I am making the bold assumption here that you already installed Visual Studio Code. If you haven’t yet, you can download it for free.

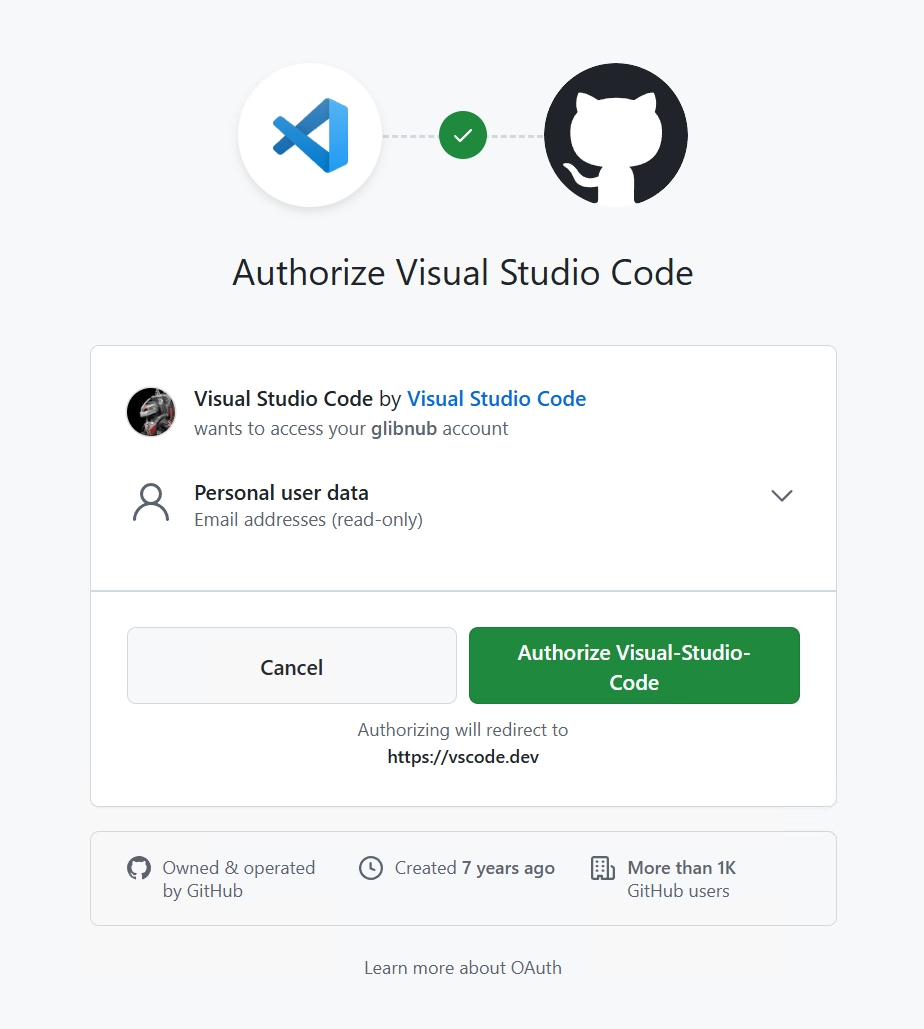

Inside Visual Studio Code, you will need to sign in with your GitHub account to use GitHub Copilot. And by the way, in case you missed it - everyone with a GitHub account gets GitHub Copilot entirely free.

If you haven’t signed in to Visual Studio Code with that GitHub account before, you might need to authorize it to access some of your account data.

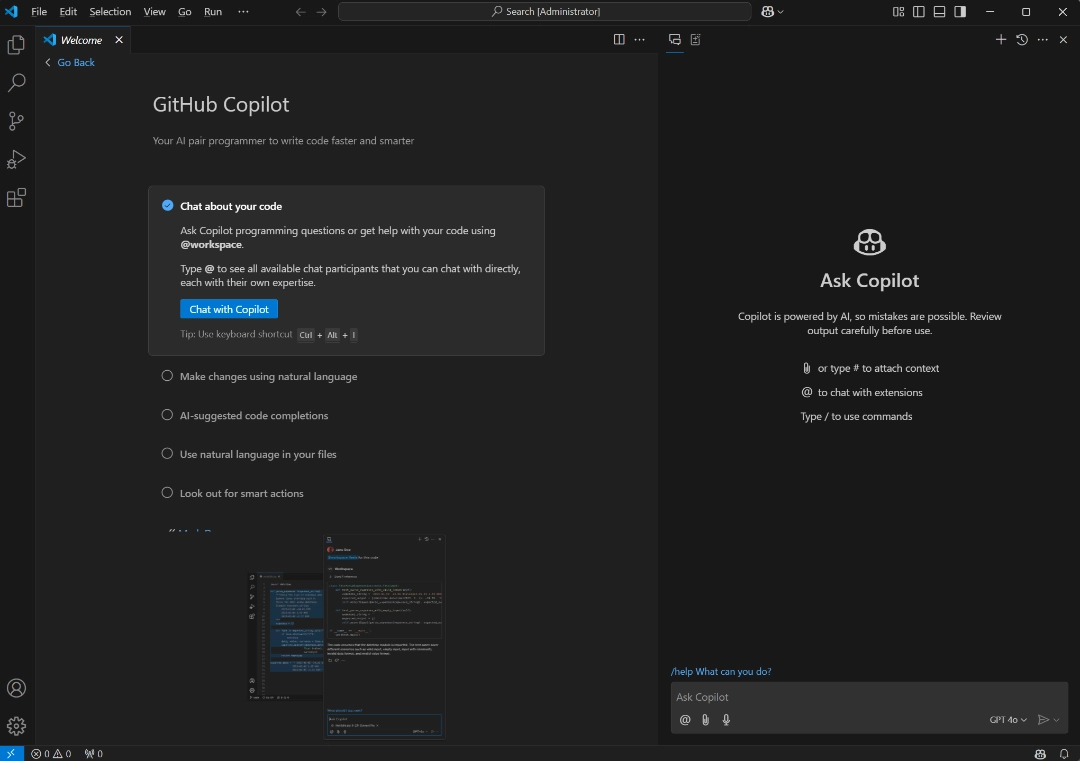

Once the sign-in is successful, you will see GitHub Copilot chat light up in the sidebar in Visual Studio Code.

You can try Copilot by asking it questions right there in the chat window, but we still don’t have access to the @vision command. To fix that, we need to download the extension. You can get it from the Visual Studio Marketplace or you can search for copilot vision in the Extensions view and install it that way.

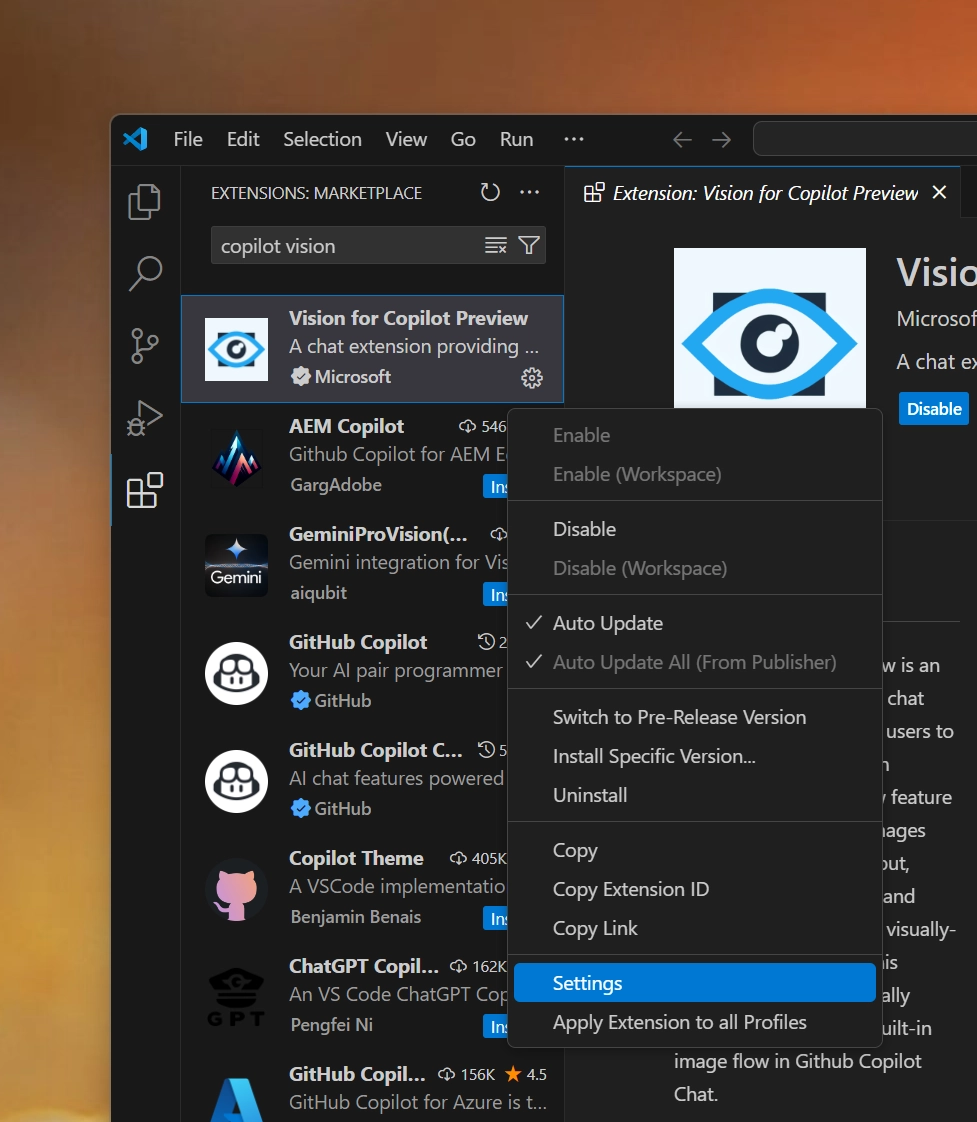

Once the installation is complete, we need to adjust some settings, since the extension isn’t quite ready out of the box at this time (remember - it’s not yet part of the core GitHub Copilot offering). In the list of installed extensions, click on the little gear icon next to Vision for Copilot Preview and select Settings.

Setting up the Vision for Copilot Preview extension

Now for the fun part - because this is not yet part of the core GitHub Copilot experience, we need to do some setup. You can configure the extension with several AI model providers, such as OpenAI, Anthropic, Google (with Gemini), and of course - Azure OpenAI. I personally prefer to use Azure OpenAI because I already host a lot of stuff there, and setting things up is relatively easy with the Azure CLI.

Before you follow the next steps, make sure that you install the Azure CLI for the platform of your choice. Once that's done, we will create a new resource group to hold all our AI-related resources:

az group create --name CopilotTestRG --location eastus

Next, let’s create the Azure OpenAI resource (this is presented as PowerShell, but you can re-format the command for bash too):

az cognitiveservices account create `
    --name CopilotTest-AOAI `
    --resource-group CopilotTestRG `
    --location eastus `
    --kind OpenAI `
    --sku s0 `
    --subscription YOUR_SUBSCRIPTION_GUID

With the resource created, we now need to deploy a model that we will use with the extension. That can be done with the help of this command (I am relying on gpt-4o):

az cognitiveservices account deployment create `
    --name CopilotTest-AOAI `
    --resource-group CopilotTestRG `
    --deployment-name VisionTestModel `
    --model-name gpt-4o `
    --model-version '2024-11-20' `
    --model-format OpenAI `
    --capacity 1 `
    --sku GlobalStandard

I promise you we’re getting to where we need to be! Let’s grab the deployment base URL:

az cognitiveservices account show `
    --name CopilotTest-AOAI `
    --resource-group CopilotTestRG `
    --query "properties.endpoint" `
    --output tsv

The output of this command will be something like this:

https://eastus.api.cognitive.microsoft.com/

We can then augment it with the full set of details we need for the extension in Visual Studio Code (VisionTestModel is the name of the deployment we specified earlier):

https://eastus.api.cognitive.microsoft.com/openai/deployments/VisionTestModel/chat/completions?api-version=2024-08-01-preview

Hold on - but where is the version coming from? Looking at all the previous commands, the model version was 2024-11-20, so where is 2024-08-01-preview sourced from?

Great catch! The version we’re using here is the REST API version, not the model version. We can get the appropriate version from the API documentation.
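To make the difference between the two version strings concrete, here is a minimal Python sketch that assembles the endpoint from its parts. The helper name `build_azure_endpoint` is made up for illustration; the values are the ones used in this post.

```python
from urllib.parse import urlencode


def build_azure_endpoint(base_url: str, deployment: str, api_version: str) -> str:
    """Assemble the chat completions URL for an Azure OpenAI deployment.

    `api_version` is the REST API version, not the model version.
    (Hypothetical helper, not part of any SDK.)
    """
    # Drop a trailing slash so the path does not end up with "//".
    base = base_url.rstrip("/")
    query = urlencode({"api-version": api_version})
    return f"{base}/openai/deployments/{deployment}/chat/completions?{query}"


endpoint = build_azure_endpoint(
    "https://eastus.api.cognitive.microsoft.com/",  # output of `az ... account show`
    "VisionTestModel",                              # deployment name, not the model name
    "2024-08-01-preview",                           # REST API version
)
print(endpoint)
```

Note that the model version (2024-11-20) never appears in the URL. It is tied to the deployment, and the deployment name is the only thing the endpoint needs.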

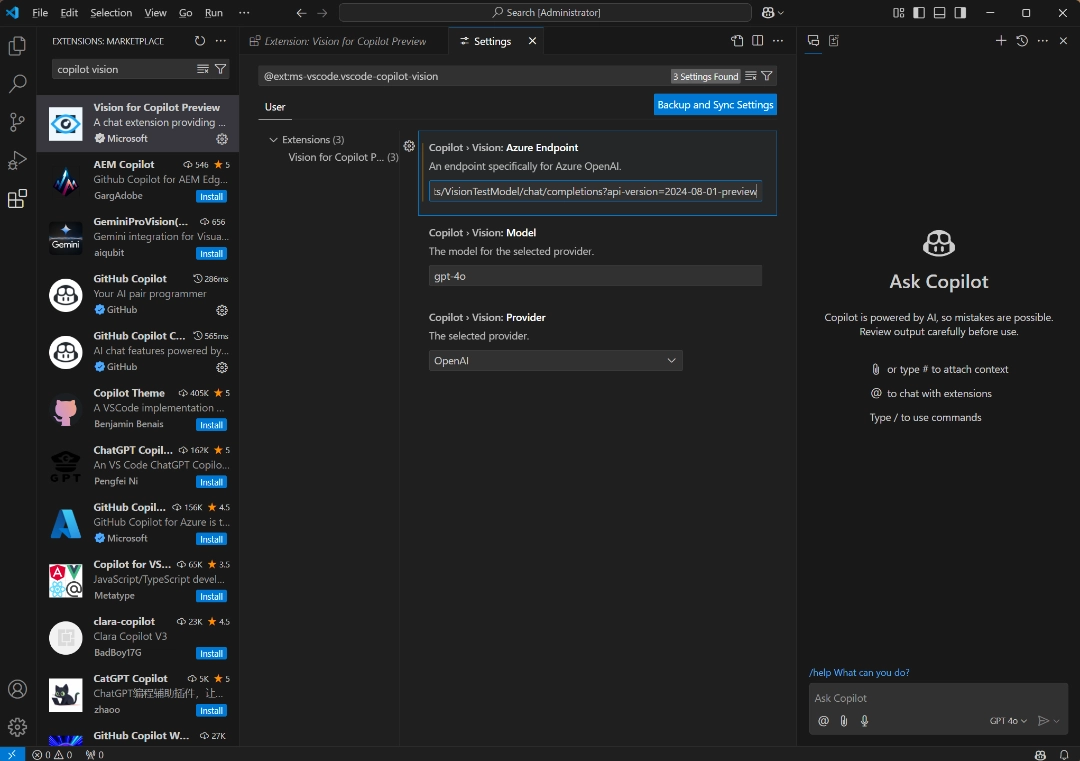

Take the fully-constructed endpoint and set it as Azure Endpoint in the extension settings:

Don’t forget to also set the Provider as Azure OpenAI. Now, we need one last component - the API key that we can use with the extension. We can, once again, use the Azure CLI for that:

az cognitiveservices account keys list `
    --name CopilotTest-AOAI `
    --resource-group CopilotTestRG `
    --query "key1" `
    --output tsv | clip

Notice the clip at the end. Piping the output to clip (a built-in Windows command) puts the key straight on the clipboard, so we don’t print private info in the terminal. Keep it handy - we will need it in a second.
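If you would like to sanity-check the endpoint and key outside of Visual Studio Code first, the sketch below builds the kind of request the chat completions API accepts for an image plus a text prompt. This is my own illustration and not necessarily what the extension sends internally. The function name `build_vision_request`, the placeholder key, and the fake image bytes are all assumptions.

```python
import base64
import json


def build_vision_request(api_key, image_bytes, prompt, mime_type="image/png"):
    """Return (headers, body) for a chat completions call with one image.

    Hypothetical helper for illustration; it only builds the request.
    """
    # The image travels inline as a base64 data URL next to the text prompt.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    payload = {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": 300,
    }
    # Azure OpenAI key authentication uses the "api-key" header.
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return headers, json.dumps(payload).encode("utf-8")


# Placeholder values: swap in your real key and the bytes of a real image.
headers, body = build_vision_request(
    "YOUR_API_KEY", b"fake image bytes", "Describe this chart."
)
print(json.loads(body)["messages"][0]["content"][0])
```

To actually send it, you could POST `body` with `headers` to the full endpoint URL from earlier, for example with `urllib.request.Request(url, data=body, headers=headers, method="POST")` followed by `urllib.request.urlopen`.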

Getting the extension to do the heavy lifting for us

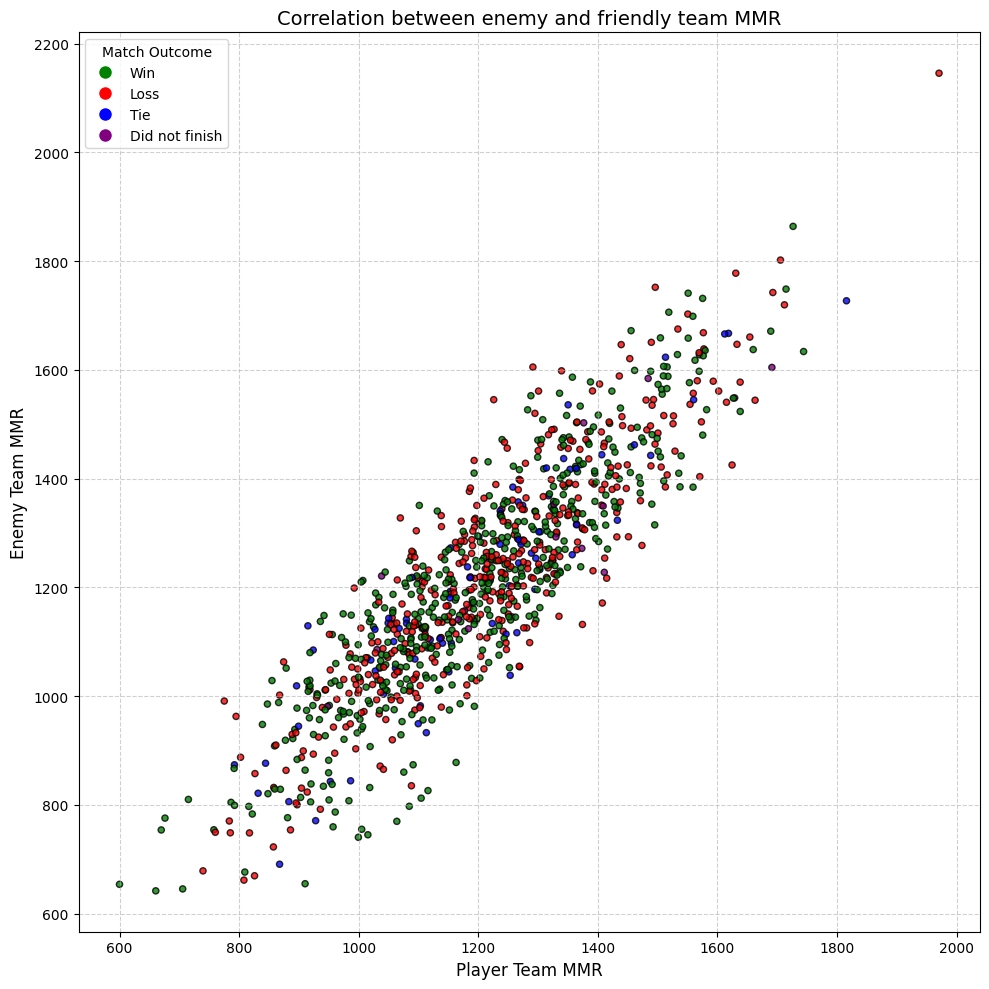

To test the extension capabilities, I will refer to one of my own blog posts from the past year - the one where I analyze the path of getting to Hero in Halo Infinite. I am going to zero in on one particular chart:

I want to see this visualization explained, and then re-created with dummy data that mimics it, because let’s assume that I know almost nothing about how to create this. Let’s copy the image from the browser and paste it in the GitHub Copilot chat view. You will know that this operation was successful when you see the image reference set as the chat context.

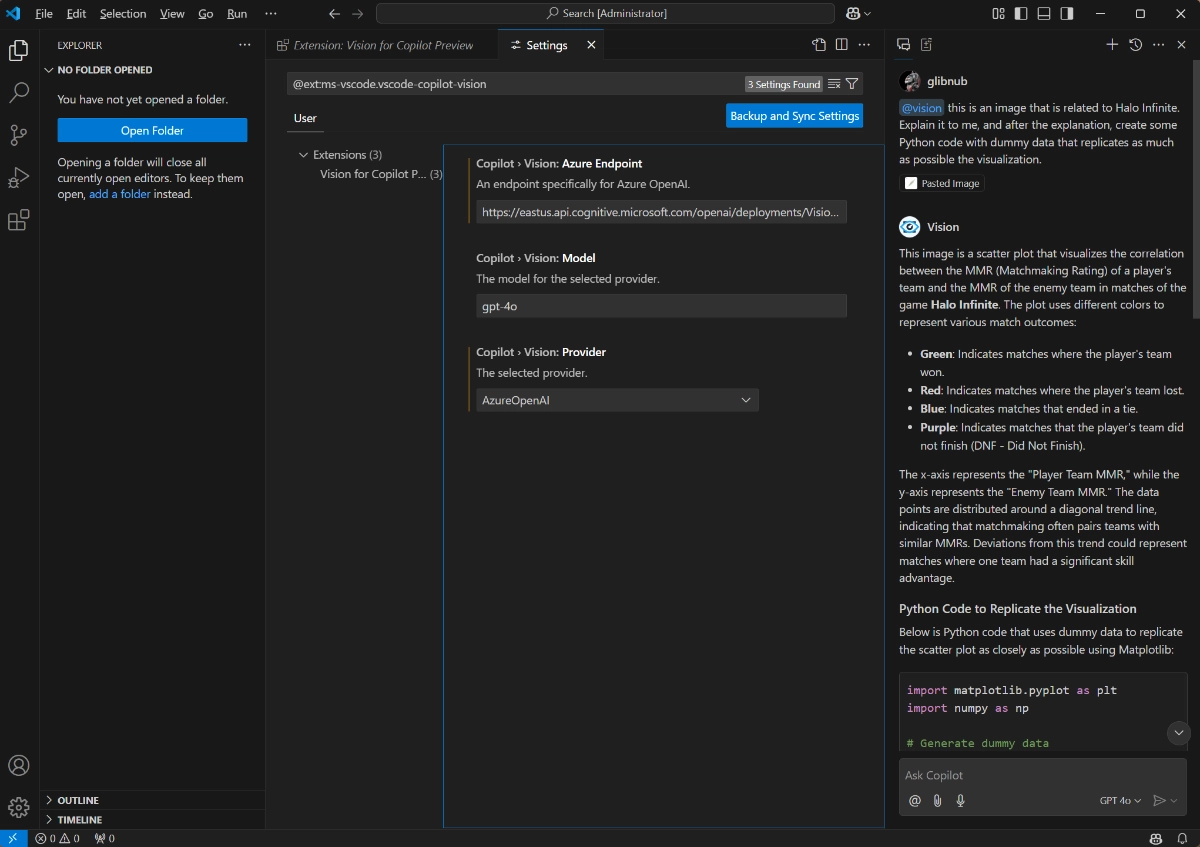

Moment of truth - let’s give GitHub Copilot a prompt, prefixing it with @vision to make sure that the Vision for Copilot Preview extension kicks in to process our request.

@vision this is an image that is related to Halo Infinite.
Explain it to me, and after the explanation, create some
Python code with dummy data that replicates as much as
possible the visualization.

Once the processing completes, we get a response like this:

Not bad for a rudimentary conclusion. Now let’s take a look at the code. The model gave us this:

import matplotlib.pyplot as plt
import numpy as np

# Generate dummy data
np.random.seed(42)
num_matches = 1000

# Randomly generate MMR values for player and enemy teams
player_team_mmr = np.random.normal(1200, 200, num_matches).clip(600, 2000)
enemy_team_mmr = player_team_mmr + np.random.normal(0, 100, num_matches)

# Randomly assign match outcomes
outcomes = np.random.choice(['Win', 'Loss', 'Tie', 'Did not finish'], size=num_matches, p=[0.5, 0.4, 0.08, 0.02])

# Map outcomes to colors
outcome_colors = {'Win': 'green', 'Loss': 'red', 'Tie': 'blue', 'Did not finish': 'purple'}
colors = [outcome_colors[outcome] for outcome in outcomes]

# Create the scatter plot
plt.figure(figsize=(10, 10))
plt.scatter(player_team_mmr, enemy_team_mmr, c=colors, alpha=0.8, edgecolor='k', s=20)

# Add labels, title, and legend
plt.title("Correlation between enemy and friendly team MMR", fontsize=14)
plt.xlabel("Player Team MMR", fontsize=12)
plt.ylabel("Enemy Team MMR", fontsize=12)

# Create a custom legend
legend_elements = [
    plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='green', markersize=10, label='Win'),
    plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='red', markersize=10, label='Loss'),
    plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='blue', markersize=10, label='Tie'),
    plt.Line2D([0], [0], marker='o', color='w', markerfacecolor='purple', markersize=10, label='Did not finish')
]
plt.legend(handles=legend_elements, title="Match Outcome", fontsize=10)

# Display grid
plt.grid(True, linestyle='--', alpha=0.6)

# Show the plot
plt.tight_layout()
plt.show()

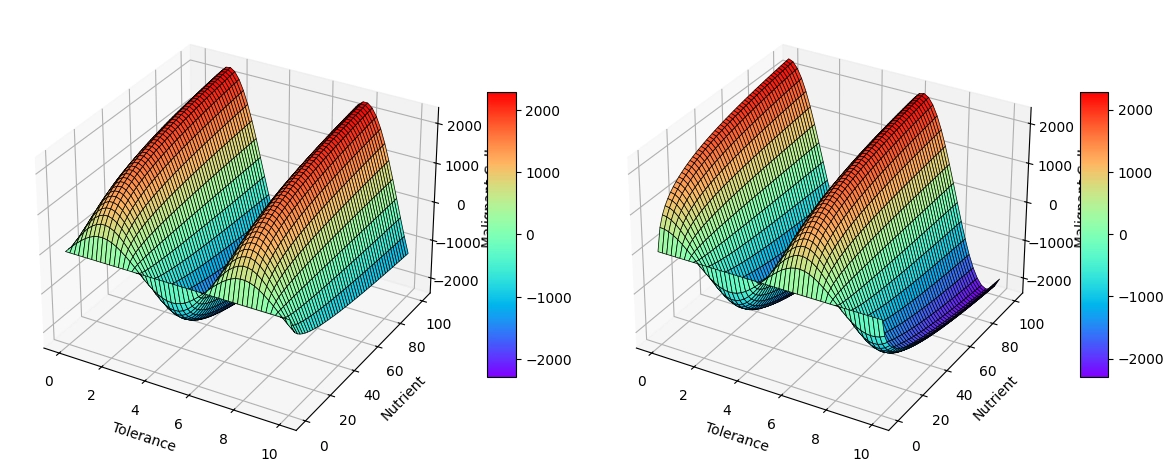

Let’s relax my assumption from above and say that I at least know how to install Python packages with pip. The code references matplotlib and numpy, and numpy gets installed along with matplotlib as one of its dependencies. I also installed jupyter to be able to quickly test the code in a notebook. I copied the code, pasted it in a cell, and ran it. I got this:

Compare it to my original scatterplot:

It’s not 100% accurate, but it got me to exactly the result I wanted.

I can also use @vision to ask if there are conclusions that are worth drawing from the data:

@vision this is a graph that was computed based
on Halo Infinite matches. What conclusions
can you draw from it?

The output I got for this prompt:

This graph shows a correlation between the Matchmaking Rating (MMR) of friendly teams (Player Team MMR, x-axis) and enemy teams (Enemy Team MMR, y-axis) in Halo Infinite matches. The data points are color-coded based on match outcomes: Win (green), Loss (red), Tie (blue), and Did Not Finish (purple). Here are some conclusions and observations:

- Strong Correlation Between Player Team MMR and Enemy Team MMR. The data points form a strong diagonal trend, indicating that the matchmaking system in Halo Infinite generally pairs teams with similar MMRs. This suggests that the system attempts to create balanced matches by matching teams of relatively equal skill levels.

- Match Outcomes Are Spread Across the MMR Spectrum. Wins (green) and losses (red) are distributed relatively evenly across the MMR range. This indicates that match outcomes are influenced by factors beyond just MMR parity, such as player performance, strategy, or teamwork. However, at the edges of the spectrum (very high or low MMR), there are fewer data points, likely due to fewer players in those skill brackets.

- Slight Asymmetry in Wins and Losses. In some areas, particularly where Player Team MMR is higher than Enemy Team MMR (to the right of the diagonal), green points (Wins) appear more common, while red points (Losses) dominate when Enemy Team MMR is higher. This suggests that teams with higher MMRs are more likely to win against lower-MMR opponents, as expected.

- Ties and “Did Not Finish” Are Rare. Blue points (Ties) and purple points (Did Not Finish) are sparse compared to Wins and Losses. Ties likely occur only under specific circumstances, such as evenly matched teams or game-specific mechanics. “Did Not Finish” outcomes are rare, possibly representing disconnections or forfeits.

- Outliers Exist. There are some outliers where Player Team MMR and Enemy Team MMR are not closely matched: For example, a few matches exist where a team with very low MMR played against a much higher-MMR team (points far from the diagonal). These may represent matchmaking errors or situations where players joined late, uneven teams, or other anomalies.

- Matchmaking Balances Teams Well Even at Higher MMRs. At higher MMRs (e.g., 1600+), the tight clustering around the diagonal indicates that matchmaking continues to balance teams effectively, even at the top skill levels. However, there is still variability in outcomes, showing that skill alone does not guarantee a win.

Conclusion

The graph illustrates that Halo Infinite’s matchmaking system generally succeeds in pairing teams with comparable MMRs, leading to balanced matches. However, outcomes depend on more than just MMR parity, as player performance, teamwork, and in-game dynamics also play significant roles. The presence of outliers and rare outcomes (ties, DNFs) suggests that the matchmaking system is not perfect but functions well for the majority of matches.

If you have spent any time playing Halo Infinite, these conclusions will definitely carry weight. @vision is a really nice way to get that first rudimentary hypothesis about the data.
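A hypothesis like "the points form a strong diagonal trend" is also easy to gut-check with a couple of lines of numpy. The sketch below reuses the dummy-data recipe from the generated scatter plot code above, so it only confirms what that recipe baked in. With real match data you would swap in your own arrays. The 100-point threshold for a "close" match is an arbitrary choice on my part.

```python
import numpy as np

# Same dummy-data recipe as the generated scatter plot code.
np.random.seed(42)
num_matches = 1000
player_team_mmr = np.random.normal(1200, 200, num_matches).clip(600, 2000)
enemy_team_mmr = player_team_mmr + np.random.normal(0, 100, num_matches)

# Pearson correlation between the two axes; values near 1 mean a tight diagonal.
correlation = np.corrcoef(player_team_mmr, enemy_team_mmr)[0, 1]

# Share of matches where the two teams are within 100 MMR of each other
# (the 100-point threshold is an arbitrary pick for illustration).
close_matches = np.mean(np.abs(player_team_mmr - enemy_team_mmr) <= 100)

print(f"correlation: {correlation:.2f}")
print(f"within 100 MMR: {close_matches:.0%}")
```

Keep in mind that the outcomes in the dummy data are assigned at random, so the win/loss asymmetry the model spotted in the original chart cannot be checked this way.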

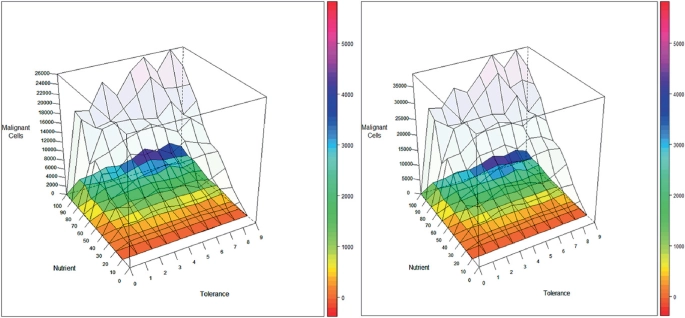

Another use case that I find interesting is quickly giving me insights on how to construct visualizations that I am not super-familiar with. For example, I spotted this 3D plot the other day:

I asked @vision and it provided me with this code:

import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D

# Generate data
tolerance = np.linspace(0, 10, 50) # X-axis
nutrient = np.linspace(0, 100, 50) # Y-axis
tolerance, nutrient = np.meshgrid(tolerance, nutrient)
malignant_cells_1 = np.sin(tolerance) * np.log1p(nutrient) * 500 # Z-axis for plot 1
malignant_cells_2 = np.cos(tolerance) * np.log1p(nutrient) * 500 # Z-axis for plot 2

# Create the figure
fig = plt.figure(figsize=(12, 6))

# Plot 1
ax1 = fig.add_subplot(121, projection='3d')
surf1 = ax1.plot_surface(
    tolerance, nutrient, malignant_cells_1, cmap='rainbow', edgecolor='k', linewidth=0.5
)
ax1.set_xlabel('Tolerance')
ax1.set_ylabel('Nutrient')
ax1.set_zlabel('Malignant Cells')
fig.colorbar(surf1, ax=ax1, shrink=0.5, aspect=10)

# Plot 2
ax2 = fig.add_subplot(122, projection='3d')
surf2 = ax2.plot_surface(
    tolerance, nutrient, malignant_cells_2, cmap='rainbow', edgecolor='k', linewidth=0.5
)
ax2.set_xlabel('Tolerance')
ax2.set_ylabel('Nutrient')
ax2.set_zlabel('Malignant Cells')
fig.colorbar(surf2, ax=ax2, shrink=0.5, aspect=10)

# Adjust layout and show the plots
plt.tight_layout()
plt.show()

It ran without errors and produced this result:

This is fantastic - I quickly learned that this is also produced with matplotlib and its mplot3d toolkit (you can read more about it). It produced the data and rendered two 3D surfaces, just like the original image. It saved me the time I would have spent digging through unreliable answers and trying to scaffold things myself.

Now, there are imperfections - the graphs are not exactly what the original image used, but 80% of the work was done for me and now I know where to look to fiddle with additional parameters.
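As an example of the kind of fiddling I mean, here is a small sketch that takes the first generated surface and changes a few common knobs: the colormap, the mesh density, the edge styling, and the camera angle. The specific values are arbitrary picks of mine, not something @vision suggested, and the sketch assumes a reasonably recent matplotlib where `projection="3d"` works without the explicit `Axes3D` import.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend for this sketch; drop this line in a notebook
import matplotlib.pyplot as plt
import numpy as np

# Same stand-in surface as the first generated plot.
tolerance, nutrient = np.meshgrid(np.linspace(0, 10, 50), np.linspace(0, 100, 50))
malignant_cells = np.sin(tolerance) * np.log1p(nutrient) * 500

fig = plt.figure(figsize=(6, 6))
ax = fig.add_subplot(111, projection="3d")

# Knobs worth experimenting with: colormap, mesh density, and edge styling.
surface = ax.plot_surface(
    tolerance, nutrient, malignant_cells,
    cmap="viridis", rcount=25, ccount=25, edgecolor="none",
)

# Camera position: elevation and azimuth, both in degrees.
ax.view_init(elev=30, azim=-120)

ax.set_xlabel("Tolerance")
ax.set_ylabel("Nutrient")
ax.set_zlabel("Malignant Cells")
fig.colorbar(surface, ax=ax, shrink=0.5, aspect=10)

# Write the result to disk; in a notebook you would call plt.show() instead.
fig.savefig("surface_tweaked.png", dpi=100)
```

Changing one parameter at a time and re-running the cell is usually the fastest way to see what each of them does.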

Conclusion

I am genuinely excited to use more and more of @vision, especially in data-related tasks, because it is a quick way to do some contextual gut-checks and get the relevant scaffolding for all sorts of visuals. But that’s also just my use case. There’s more, like analyzing architecture diagrams and recreating infrastructure with it, or telling me how to re-create certain UI elements in HTML and CSS. It feels like a superpower, almost like talking to a programmer who’s paired with me and can quickly toss out some ideas once I share a concept with them.