Unlocking My Spotify Podcast Data

If you are a podcast owner, one of the things that can be a bit annoying is the multitude of different data points available for your show. The problem is not that there is too much data, but rather that the data is scattered across different providers, each with its own system and its own way of managing it.

Apple has its own analytics, Google has an entirely different experience, and Spotify has a dashboard as well (although experience-wise it's leaps and bounds better than the other two). Nonetheless, as someone who runs a podcast, I got really tired of going to three different pages to get the data I need, so I decided to start solving this problem for myself, and hopefully for someone else along the way. My starting point was Spotify, because their API was the least convoluted.

Let's start with the destination: I wanted to get to a point where I could aggregate all of my Spotify data in one place, ideally in something like a SQLite database file that I could then load into a Python program in a Jupyter notebook for analysis. Generally, I am a big fan of owning my data so that I can process and analyze it however I want. Not that I expect super-detailed insights from the aggregated numbers Spotify provides, but it's better than nothing.

In this blog post, I will outline my approach to accessing my podcast data from the Spotify data service, making it ready for local storage, processing, and analysis.

To get access to the data, I logged in to my Spotify podcaster dashboard and fired up the network inspector. Whichever browser you are using, it should have one built in, so there is no need to install anything. The Spotify dashboard renders a range of insights about the audience, podcast downloads, and where folks are listening from, all interesting to check out.

Surely, if Spotify can render pretty charts and graphs, it must be making API calls behind the scenes that provide the raw numbers. A couple of refreshes later, I saw calls being made to the following URL:

https://generic.wg.spotify.com/podcasters/v0/shows/SHOW_ID/episodes?end=2021-04-20&filter=&page=1&size=50&sortBy=releaseDate&sortOrder=descending&start=2021-04-14
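The query string carries the date range, paging, and sorting options. As a minimal sketch, the same URL can be assembled from a dictionary with Python's standard library, which also takes care of URL-encoding the values. The function name `build_episodes_url` is hypothetical, and `SHOW_ID` remains a placeholder for your own show's ID.

```python
from urllib.parse import urlencode

BASE_URL = "https://generic.wg.spotify.com/podcasters/v0/shows"


def build_episodes_url(show_id: str, start: str, end: str, page: int = 1,
                       size: int = 50, sort_by: str = "releaseDate",
                       sort_order: str = "descending", query_filter: str = "") -> str:
    """Hypothetical helper: rebuild the episodes URL observed in the network inspector."""
    params = {
        "end": end,
        "filter": query_filter,
        "page": page,
        "size": size,
        "sortBy": sort_by,
        "sortOrder": sort_order,
        "start": start,
    }
    # urlencode keeps the dictionary order and percent-encodes values where needed
    return f"{BASE_URL}/{show_id}/episodes?{urlencode(params)}"


print(build_episodes_url("SHOW_ID", start="2021-04-14", end="2021-04-20"))
```

Called with the dates from the captured request, this reproduces the URL above character for character.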

Good starting point with minimal effort. The call returns nicely formatted JSON that contains information about my episodes. Is this an openly accessible API, though? Not really: there is an Authorization header embedded in the request. Luckily, the API uses bearer authentication, meaning that a token is issued to the user, and that token grants access to specific parts of the API. How do I get this token? To the network inspector!

https://generic.wg.spotify.com/creator-auth-proxy/v1/web/token?client_id=CLIENT_ID_GOES_HERE

Bingo! The token is obtained through a GET request that requires two cookie values, sp_dc and sp_key. The request returns JSON with the token, the token type, the expiration time-frame, and the scope. The client ID seems to be associated either with the web player or with the client application that is calling the API, and it is a static value.

The returned JSON looks like this:

```json
{
  "access_token": "TEST_TOKEN",
  "token_type": "Bearer",
  "expires_in": 3600,
  "scope": "streaming ugc-image-upload user-read-email user-read-private"
}
```
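Because the token only lives for a limited time, a script that runs for a while needs to know when to fetch a new one. Here is a minimal sketch, assuming `expires_in` is a lifetime in seconds (as in standard OAuth 2.0 responses, so 3600 would be one hour). The helper names `parse_token` and `is_expired` are hypothetical.

```python
import json
import time
from typing import Optional


def parse_token(raw: str, now: Optional[float] = None) -> dict:
    """Parse the token JSON and record an absolute expiry timestamp (hypothetical helper)."""
    data = json.loads(raw)
    issued_at = time.time() if now is None else now
    # Assumption: expires_in is a lifetime in seconds, so turn it into a deadline
    data["expires_at"] = issued_at + data["expires_in"]
    return data


def is_expired(token: dict, now: Optional[float] = None, margin: float = 60.0) -> bool:
    """Treat the token as expired a little early so a request never races the deadline."""
    current = time.time() if now is None else now
    return current >= token["expires_at"] - margin


sample = ('{"access_token": "TEST_TOKEN", "token_type": "Bearer", "expires_in": 3600, '
          '"scope": "streaming ugc-image-upload user-read-email user-read-private"}')
token = parse_token(sample, now=1_000_000)
print(token["expires_at"])               # 1003600
print(is_expired(token, now=1_003_000))  # False
print(is_expired(token, now=1_003_550))  # True (inside the 60-second margin)
```

The fixed `now` values are only there to make the example deterministic; in a real script you would omit them and let `time.time()` fill in the current time.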

But now there is a question: how do I automate getting those pesky cookie values so that I can request the token? How far do I need to go to make this process script-worthy? Well, somewhat far. It all starts with a funky endpoint that seems to accept a POST request:

https://accounts.spotify.com/login/password

The username and password are encoded into a form and sent to this endpoint, but because I would also need to deal with CSRF tokens, reimplementing that flow seemed a bit counter-productive. What else can I do? Well, I could use Selenium to drive the login instead. The nice thing about Selenium is that I operate a real web browser and don't need to manually re-implement authentication flows.

To get this working, I used Python along with the Edge web driver. You can use other web drivers here as well, such as those for Chromium or Firefox. As a proof of concept, I strung together this code:

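```python
from pathlib import Path

from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait
# Edge support for Selenium 3 comes from the separate msedge-selenium-tools package
# (Selenium 4 ships its own selenium.webdriver.Edge instead)
from msedge.selenium_tools import Edge, EdgeOptions

# The driver binary lives in a "drivers" folder one level above this script's folder
work_root = Path(__file__).resolve().parent.parent
browser_driver = str(work_root / 'drivers' / 'msedgedriver.exe')
print(browser_driver)

options = EdgeOptions()
options.use_chromium = True  # target the Chromium-based Edge rather than legacy EdgeHTML

driver = Edge(executable_path=browser_driver, options=options)

# Open the Spotify login page; after a successful login it redirects to the podcasters portal
driver.get("https://accounts.spotify.com/en/login?continue=https:%2F%2Fpodcasters.spotify.com%2Fgateway")

# Give the user up to 40 seconds to log in, then wait for the podcasters portal body element
WebDriverWait(driver, 40).until(
    EC.presence_of_element_located((By.XPATH, '//body[contains(@data-qa, "s4-podcasters")]'))
)

print("Logged in!")
print(driver.get_cookies())
```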

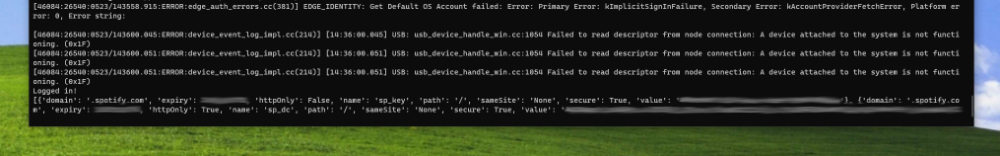

The purpose of the snippet above is pretty simple - get the web driver binary from a local folder, that is relative to my Python script. Then, run the web driver and point it to the Spotify authentication page, with a redirect to the podcasting portal as a target of a successful log in. And last - once the script detects that the log in is successful through the presence of a body element with a specific attribute, get the cookies. When the script is executed and I get to log in, I see this in the terminal:

Well, look at that: we have sp_dc and sp_key, with a generous one-year expiry! This gives me plenty of room to experiment, and I can even grab and store the values locally with a couple of lines of Python code:

```python
import json

# Keep only the two cookies needed for the token exchange
cookie_container = {
    "sp_dc": driver.get_cookie("sp_dc")["value"],
    "sp_key": driver.get_cookie("sp_key")["value"],
}

with open('_sc.json', 'w') as cookie_file:
    json.dump(cookie_container, cookie_file)
```
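Since the cookies last about a year, it can be handy to know when the Selenium login needs to be repeated. Selenium's cookie dictionaries carry an `expiry` key (a Unix timestamp in seconds) for persistent cookies, so one option is to store it next to the value. The sketch below is hypothetical: `cookie_record` and `days_left` are made-up helpers, the sample cookie values are invented, and storing records in this shape would require a matching change wherever `_sc.json` is read.

```python
from datetime import datetime, timezone
from typing import Optional


def cookie_record(cookie: dict) -> dict:
    """Keep the value and, if present, the Unix expiry of a Selenium cookie dictionary."""
    return {"value": cookie["value"], "expiry": cookie.get("expiry")}


def days_left(expiry: Optional[int], now: datetime) -> Optional[float]:
    """Days until the cookie expires, or None for a session cookie without an expiry."""
    if expiry is None:
        return None
    expires = datetime.fromtimestamp(expiry, tz=timezone.utc)
    return (expires - now).total_seconds() / 86400


# Example with a dictionary shaped like Selenium's get_cookie() output (values are made up)
fake_cookie = {"name": "sp_dc", "value": "abc", "expiry": 1_650_000_000}
record = cookie_record(fake_cookie)
now = datetime.fromtimestamp(1_650_000_000 - 10 * 86400, tz=timezone.utc)
print(days_left(record["expiry"], now))  # 10.0
```

With the real driver, `cookie_record(driver.get_cookie("sp_dc"))` would produce the same shape.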

With the content stored in a file, I can now write a utility that, on launch, acquires the token and then proceeds to collect all the relevant data. With the cookies stored in _sc.json, all it takes to generate the bearer token is a helper class with two functions: get_local_tokens, which reads the locally stored information needed to produce a token, and get_bearer_token, which exchanges the cookie data for an actual token that I can use to execute my requests:

```python
import json

import requests


class TokenHelper:
    @staticmethod
    def get_bearer_token(cookie_data, client_id):
        """Exchange the sp_dc/sp_key cookies for a short-lived bearer token."""
        url = 'https://generic.wg.spotify.com/creator-auth-proxy/v1/web/token'
        response = requests.get(
            url,
            params={'client_id': client_id},
            cookies={'sp_dc': cookie_data['sp_dc'], 'sp_key': cookie_data['sp_key']},
        )
        response.raise_for_status()  # fail loudly if the cookies are stale or rejected
        return response.json()

    @staticmethod
    def get_local_tokens():
        """Read the stored cookies and the client ID from local files."""
        # Load JSON with cookie data
        with open('_sc.json') as cookie_file:
            cookie_data = json.load(cookie_file)

        # Load client ID data; strip() drops a trailing newline if the file has one
        with open('_cid.txt') as client_id_file:
            client_id = client_id_file.read().strip()

        return cookie_data, client_id
```

The get_local_tokens function reads in the cookie file (_sc.json) that we generated earlier, along with a text file (_cid.txt) containing the client ID, which you can copy from the Spotify token request in your browser's network inspector. An alternative architecture would be a single config file that stores all the important information, but for simplicity and experimentation purposes, I am keeping them separate for now.

get_bearer_token issues a request to the Spotify service to produce a new token based on cookie values and the client ID that I read from the aforementioned local file.
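For the single config file alternative, here is a minimal sketch. The file name `config.json`, its keys, and the `load_config` function are all hypothetical; it simply returns the same `(cookie_data, client_id)` pair that get_local_tokens does, so the rest of the code would not need to change.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, Tuple


def load_config(path: Path) -> Tuple[Dict[str, str], str]:
    """Read cookies and client ID from one JSON file (hypothetical layout)."""
    config = json.loads(path.read_text())
    cookie_data = {"sp_dc": config["sp_dc"], "sp_key": config["sp_key"]}
    # strip() guards against a stray newline pasted along with the client ID
    return cookie_data, config["client_id"].strip()


# Example: write a throwaway config with placeholder values and read it back
with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "config.json"
    config_path.write_text(json.dumps({"sp_dc": "DC", "sp_key": "KEY", "client_id": "CLIENT_ID\n"}))
    cookies, client_id = load_config(config_path)
    print(cookies, client_id)
```

Keeping one file makes it harder to end up with mismatched cookies and client IDs, at the cost of mixing secrets and configuration in the same place.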

In my main application, I can now call the token functions like this:

```python
from spdc.helpers import TokenHelper as th

cookie_data, client_id = th.TokenHelper.get_local_tokens()
bearer_token_data = th.TokenHelper.get_bearer_token(cookie_data, client_id)
```

This implementation covers the essentials, and now I can try to implement a wrapper around the Spotify podcast data API. For example, I started with the data ingestion function for episode data, which looks like this:

```python
import requests


class DataHelper:
    @staticmethod
    def get_episode_data(show_id, token, start, end, page, size, sort_by, sort_order, query_filter):
        """Fetch one page of episode data for a show within a date range."""
        url = f'https://generic.wg.spotify.com/podcasters/v0/shows/{show_id}/episodes'
        params = {
            'end': end,
            'filter': query_filter,
            'page': page,
            'size': size,
            'sortBy': sort_by,
            'sortOrder': sort_order,
            'start': start,
        }
        headers = {'Authorization': f'Bearer {token}'}
        # requests builds and URL-encodes the query string from params
        response = requests.get(url, headers=headers, params=params)
        response.raise_for_status()
        return response.json()
```
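Since get_episode_data takes `page` and `size`, a show with more episodes than one page holds needs several calls. A minimal sketch of a generic pager follows; `iterate_pages` is hypothetical, and it assumes the caller's `fetch_page` returns the list of episodes for a 1-based page. The post doesn't show which JSON field holds that list, so extracting it from the get_episode_data response is left to the caller.

```python
from typing import Any, Callable, Iterator, List


def iterate_pages(fetch_page: Callable[[int], List[Any]], size: int) -> Iterator[Any]:
    """Yield items page by page, stopping once a page comes back short or empty."""
    page = 1
    while True:
        items = fetch_page(page)
        yield from items
        if len(items) < size:
            break  # a short page means there is nothing left to fetch
        page += 1


# Example with fake data standing in for the API: 120 items served 50 at a time
fake_episodes = list(range(120))


def fake_fetch(page: int) -> List[int]:
    offset = (page - 1) * 50
    return fake_episodes[offset:offset + 50]


all_items = list(iterate_pages(fake_fetch, size=50))
print(len(all_items))  # 120
```

Stopping on a short page avoids needing a total count from the API, at the cost of one extra empty request when the total is an exact multiple of `size`.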

Once the token is there, finding the right API endpoints is just a matter of inspecting the traffic as I navigate through the Spotify catalog pages. Most of the requests are quite repetitive, so it's nice to formalize them as function calls that I can refer to from the main application like this:

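```python
from spdc.helpers import DataHelper as dh  # assumed to sit next to the TokenHelper module

# get_show_id() returns the show ID; its implementation isn't shown here
episode_data = dh.DataHelper.get_episode_data(
    show_id=get_show_id(),
    token=bearer_token_data['access_token'],
    start='2021-05-16', end='2021-05-22',
    page='1', size='50',
    sort_by='releaseDate', sort_order='descending',
    query_filter='',
)

print(episode_data)
```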
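The example call uses a fixed range, 2021-05-16 to 2021-05-22, which is seven calendar days if both endpoints count. To avoid hard-coding dates on every run, a small helper can compute such a trailing window; `trailing_window` is a hypothetical name, and whether the API treats `end` as inclusive is an assumption.

```python
from datetime import date, timedelta
from typing import Tuple


def trailing_window(end: date, days: int = 7) -> Tuple[str, str]:
    """Return (start, end) ISO dates spanning `days` days that end on `end`, inclusive."""
    start = end - timedelta(days=days - 1)
    return start.isoformat(), end.isoformat()


print(trailing_window(date(2021, 5, 22)))  # ('2021-05-16', '2021-05-22')
```

In practice, `trailing_window(date.today())` would give the last seven days, ready to pass as `start` and `end`.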

That’s it! I can now get the Spotify podcast data locally, and store the JSON in whichever way I want. Analysis will be a bit more interesting, but that’s for a future post on the subject.
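Since the original destination was a SQLite file, here is one minimal way to start: store each raw response as JSON text, keyed by show and date range, and defer any schema design until the response structure is better understood. The table name, columns, `store_snapshot` function, and sample payload are all hypothetical.

```python
import json
import sqlite3
from datetime import datetime, timezone


def store_snapshot(conn: sqlite3.Connection, show_id: str, start: str, end: str, payload: dict) -> None:
    """Save one raw API response as JSON text, keyed by show and date range."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS episode_snapshots (
               show_id TEXT NOT NULL,
               start_date TEXT NOT NULL,
               end_date TEXT NOT NULL,
               fetched_at TEXT NOT NULL,
               payload TEXT NOT NULL
           )"""
    )
    conn.execute(
        "INSERT INTO episode_snapshots VALUES (?, ?, ?, ?, ?)",
        (show_id, start, end, datetime.now(timezone.utc).isoformat(), json.dumps(payload)),
    )
    conn.commit()


# Example with an in-memory database and a made-up payload
conn = sqlite3.connect(":memory:")
store_snapshot(conn, "SHOW_ID", "2021-05-16", "2021-05-22", {"episodes": []})
row = conn.execute("SELECT show_id, payload FROM episode_snapshots").fetchone()
print(row)  # ('SHOW_ID', '{"episodes": []}')
```

Swapping `":memory:"` for a file path such as `"podcast.db"` gives a file that a Jupyter notebook can open later with the same `sqlite3` module.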