Pulling Ubnt Stats Locally

I love looking at my Ubnt graphs: how much traffic goes where, to which clients, and many other interesting indicators. But I am also the kind of person who loves having raw access to the data, so I started digging. How can I pull those statistics locally?

The setup I run is described here: a cloud key manages the network, which is composed of a router acting as a switch, an access point, and the security gateway. So when I think about where the aggregated data lives, I think of the cloud key, which stores it. A bit more digging suggests that the data is likely managed by a MongoDB instance, which should make it easy to get at.

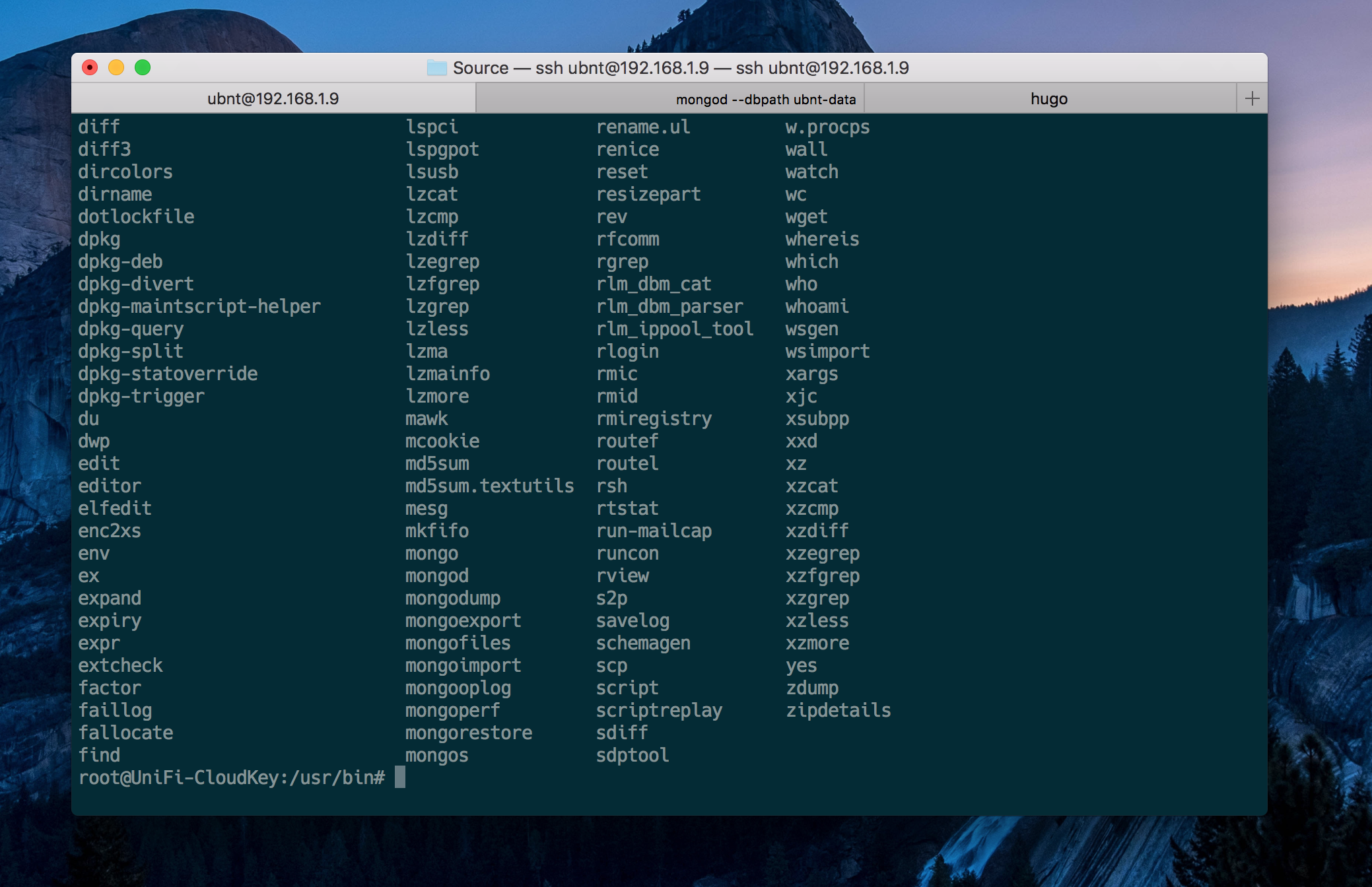

To see what I can dig up, I SSH into the cloud key.

Going through /usr/bin reveals what we would expect: the MongoDB command-line tools, including mongodump, are installed.
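If you want to repeat that check, a small helper like the one below lists the MongoDB binaries in a directory. It is only a sketch: list_mongo_tools is a hypothetical name, and on the cloud key you would run it after logging in with something like ssh {USER}@{CLOUD_KEY_IP}, where the user and address are placeholders for your own.

```shell
# Sketch: list MongoDB-related binaries in a directory (default: /usr/bin).
# list_mongo_tools is a hypothetical helper, not part of any UniFi tooling.
list_mongo_tools() {
  local dir="${1:-/usr/bin}"
  # One entry per line; keep only names starting with "mongo"
  # (for example mongod, mongodump, mongorestore).
  ls -1 "$dir" | grep '^mongo'
}
```

Running list_mongo_tools with no argument checks /usr/bin, which is where mongodump lives on the cloud key.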

This means we can easily dump the data out. The MongoDB instance listens on port 27117, and the database we are looking for is called ace_stat:

```shell
/usr/bin/mongodump --port 27117 -d ace_stat -o /home/datadump
```
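If you plan to take more than one snapshot, it helps to write each dump to its own timestamped directory so that new runs do not overwrite old ones. The sketch below wraps the mongodump call above in a function. It assumes mongodump is on the PATH and that MongoDB listens on port 27117, as on my cloud key; dump_ace_stat and the timestamp layout are my own choices, not anything UniFi provides.

```shell
# Sketch: dump ace_stat into a fresh, timestamped directory.
# Assumptions: mongodump is on the PATH and MongoDB listens on port 27117.
# dump_ace_stat is a hypothetical helper name.
dump_ace_stat() {
  local base_dir out_dir
  base_dir="${1:-/home/datadump}"
  out_dir="$base_dir/$(date +%Y%m%d-%H%M%S)"
  # Send mongodump's own output to stderr so stdout carries only the path.
  mongodump --port 27117 -d ace_stat -o "$out_dir" >&2 || return 1
  # Print the directory the dump was written to, for the copy step.
  printf '%s\n' "$out_dir"
}
```

Calling out=$(dump_ace_stat) on the cloud key leaves the path of the new dump in $out, ready to be copied off the device.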

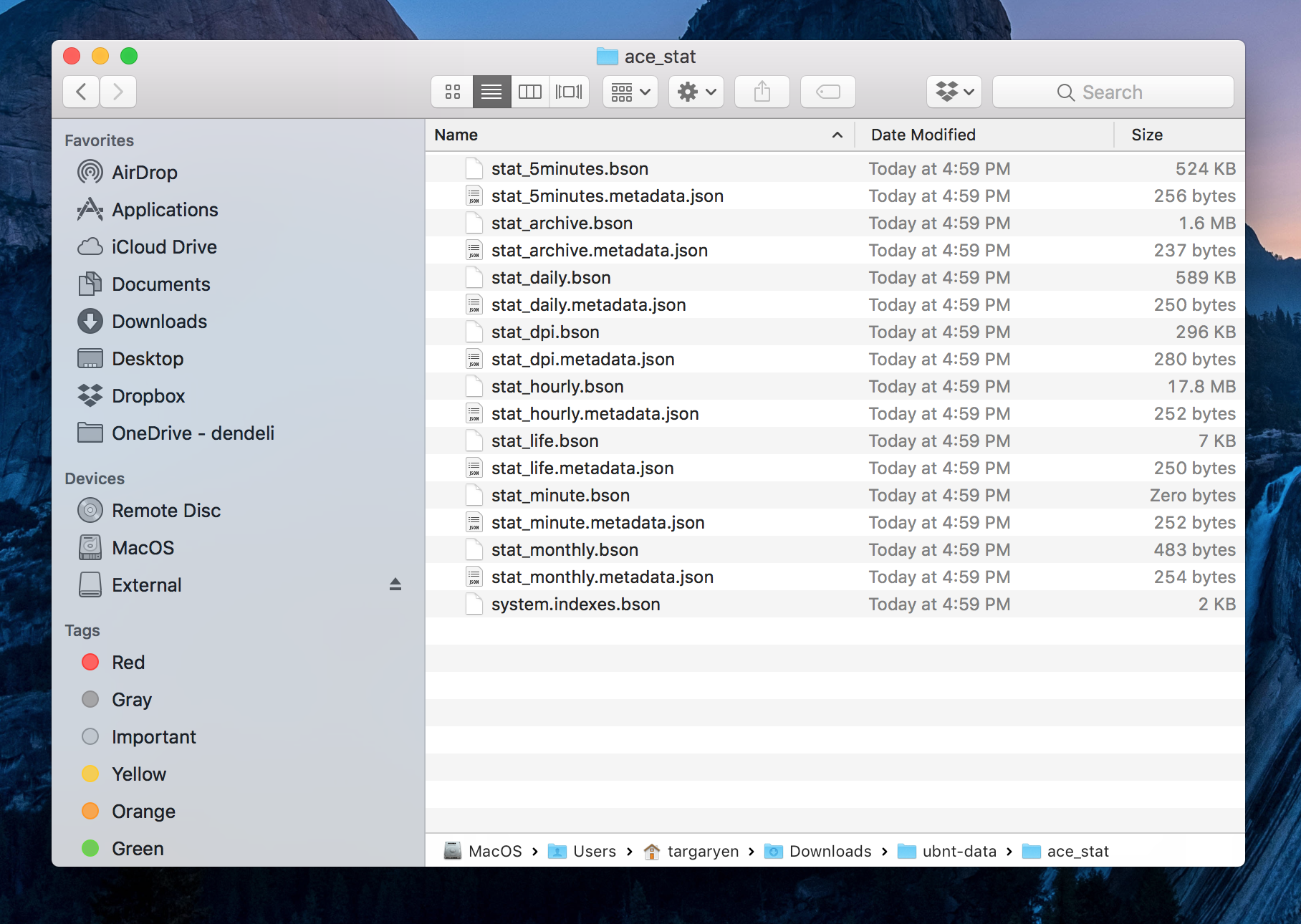

If all goes well, the data dump is now ready on the cloud key. You can now copy it to your local machine (the one you SSH-ed from), replacing {USER}@{CLOUD_KEY_IP} with your cloud key's SSH user and address:

```shell
scp -r {USER}@{CLOUD_KEY_IP}:/home/datadump /Users/targaryen/Downloads/ubnt-data
```
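The copy step can be wrapped the same way. This is a sketch under the assumption that you already have working SSH access to the cloud key; pull_dump is a hypothetical name, and the remote address is passed in rather than hard-coded.

```shell
# Sketch: copy a dump directory from the cloud key to this machine.
# Assumptions: SSH access to the cloud key already works.
# pull_dump is a hypothetical helper name.
pull_dump() {
  local remote="$1"      # placeholder, e.g. {USER}@{CLOUD_KEY_IP}
  local remote_dir="$2"  # e.g. /home/datadump
  local local_dir="$3"   # e.g. /Users/targaryen/Downloads/ubnt-data
  if [ -z "$remote" ] || [ -z "$remote_dir" ] || [ -z "$local_dir" ]; then
    echo "usage: pull_dump USER@HOST REMOTE_DIR LOCAL_DIR" >&2
    return 2
  fi
  # -r copies the whole directory tree.
  scp -r "$remote:$remote_dir" "$local_dir"
}
```

One thing to watch: if LOCAL_DIR already exists, scp -r copies datadump into it instead of creating it, so the dump ends up under LOCAL_DIR/datadump/ace_stat rather than LOCAL_DIR/ace_stat.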

You should now have the complete data dump locally.

Time to view the data locally! First, install MongoDB on your local computer. The steps vary by platform, so I will leave it to you to follow the official documentation.

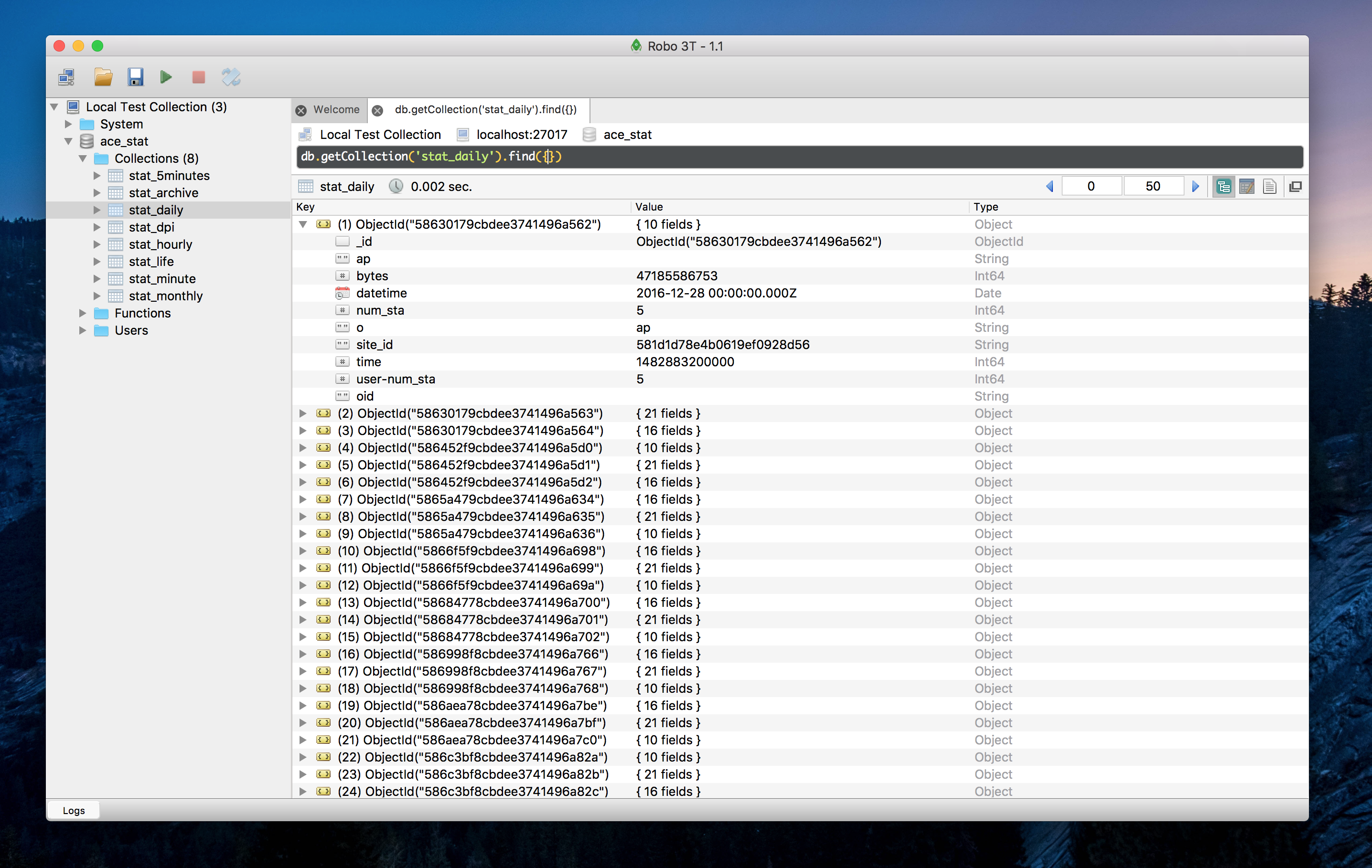

I like to have a GUI for my data, so given that I am working on a Mac, I downloaded and installed Robo3T.

To import the data, make sure the MongoDB server is running (you can typically start it with mongod --dbpath {PATH_TO_SOME_DATA_DIRECTORY}). Once it is up, run the import command from the directory that contains ubnt-data (~/Downloads in my case):

```shell
mongorestore -d ace_stat ubnt-data/ace_stat
```
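If you re-import newer snapshots over time, you probably want each import to replace the previous one rather than merge into it. The sketch below mirrors the command above and adds mongorestore's --drop flag for that. It assumes a local mongod is already running on the default port; restore_ace_stat is a hypothetical name.

```shell
# Sketch: (re)import a dumped ace_stat directory into the local mongod.
# Assumptions: mongod is running on the default port and mongorestore
# is on the PATH. restore_ace_stat is a hypothetical helper name.
restore_ace_stat() {
  local dump_dir="${1:-ubnt-data/ace_stat}"
  if [ ! -d "$dump_dir" ]; then
    echo "no dump found at $dump_dir" >&2
    return 1
  fi
  # --drop drops each collection that is in the backup before restoring
  # it, so a newer snapshot replaces the old data instead of merging.
  mongorestore --drop -d ace_stat "$dump_dir"
}
```

Collections that exist locally but are not in the backup are left alone by --drop.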

Here -d names the target database, and the path points at the dumped ace_stat directory. Now, when you connect with a tool like Robo3T, you can see all the stats data in the ace_stat database.

Now you have a snapshot of the data! Next on my TODO list is a scheduled job that pulls the data, converts it into a more consumable format (e.g. CSV), and feeds it into an analytics platform.
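For the CSV part, mongoexport can already write CSV, so a first step might look like the sketch below. It assumes the dump has been restored into a local mongod and a MongoDB tools version that supports --type=csv (3.0 or later). The collection and field names are placeholders: pick them from what you see in Robo3T. export_collection_csv is a hypothetical name.

```shell
# Sketch: export one ace_stat collection to CSV with mongoexport.
# Assumptions: data restored into a local mongod (default port);
# collection and field names come from your own data.
# export_collection_csv is a hypothetical helper name.
export_collection_csv() {
  local collection="$1" fields="$2" out_file="$3"
  if [ -z "$collection" ] || [ -z "$fields" ] || [ -z "$out_file" ]; then
    echo "usage: export_collection_csv COLLECTION FIELD1,FIELD2 OUT.csv" >&2
    return 2
  fi
  # CSV output requires an explicit, comma-separated list of fields.
  mongoexport -d ace_stat -c "$collection" --type=csv \
    --fields "$fields" -o "$out_file"
}
```

A scheduled job could then source these helpers from one script and run it from cron, for example with a crontab line such as 0 3 * * * /path/to/pull-ubnt-stats.sh, where the script path is hypothetical.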

Cheers!